The Chinese AI startup DeepSeek has surprisingly risen through the ranks to overtake ChatGPT in Apple’s App Store. For regular users, its R1 model is on par with OpenAI’s o1, and you don’t need to pay a subscription to use it.

It also differentiates itself by being open source and capable of running locally, but let’s be honest: the number of people who use it this way is probably quite limited.

By offering developers high-end LLMs at a fraction of the usual cost, DeepSeek is an interesting alternative to more established AI providers. But its rise also raises fundamental questions about AI governance, data security, and compliance, which are increasingly critical concerns for businesses that rely on AI.

Who Cares About AI Trust? Developer vs. CTO Perspective

The importance of AI trust and security varies depending on one’s organisational role.

It’s a sweeping generalisation, but the factors a developer typically weighs are usability, affordability, and technical flexibility: if an AI tool is fast, affordable, and works in their stack, that’s often sufficient. Of course, privacy and security matter, but perhaps not as first-order factors when choosing a model.

CTOs, CISOs, and CIOs, on the other hand, have to look beyond functionality: they are responsible for regulatory compliance, data protection, and long-term risk. Enterprise-level AI adoption requires assurances about data handling, auditability, and legal accountability. These factors become even more significant in highly regulated industries such as financial services or healthcare.

This divide is part of the reason why AI governance frameworks, such as Salesforce’s Einstein Trust Layer, are gaining so much importance.

What Is the Salesforce Einstein Trust Layer?

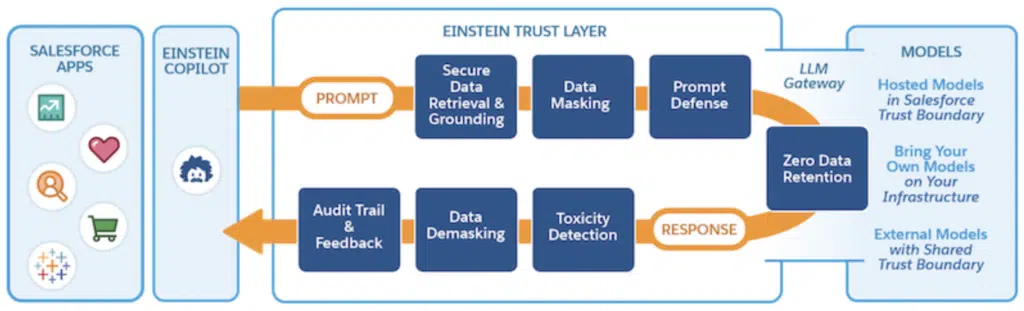

Through the Einstein Trust Layer, Salesforce has moved into a prominent position in AI governance. The Trust Layer provides:

- Data Masking: Detects sensitive information and masks it before the prompt is sent to the LLM, reducing exposure.

- Data Grounding with Secure Data Retrieval: Prompts are dynamically grounded in data the user is allowed to access, so role-based security controls are preserved in AI-generated responses.

- Zero Data Retention Policy: No customer data is retained by third-party large language model providers beyond the immediate processing of a request.

- Toxicity Scoring: Detects inappropriate or harmful content in both prompts and outputs.

- Prompt Defense: Helps limit hallucinations and reduces the likelihood of unintended or harmful outputs.

- Auditability: Interactions with an AI model are logged for compliance and oversight purposes.

Together, these controls let organisations use AI within Salesforce without increasing their exposure to compliance risks or data misuse.
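To make the data masking step more concrete, here is a minimal Python sketch of the general mask-then-restore idea, assuming simple regex detection and placeholder tokens. The patterns, the `mask`/`unmask` helpers, and the `<EMAIL_1>` token format are hypothetical illustrations, not Salesforce’s implementation or API; the Trust Layer’s own detection also uses ML and is configured in Setup rather than written as code.

```python
import re

# Hypothetical detection rules for illustration only (not Salesforce's actual rules).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def mask(prompt: str) -> tuple[str, dict[str, str]]:
    """Replace each detected value with a placeholder; return masked text and token map."""
    token_map: dict[str, str] = {}
    masked = prompt
    for label, pattern in PATTERNS.items():
        counter = 0

        def substitute(match: re.Match) -> str:
            nonlocal counter
            counter += 1
            token = f"<{label}_{counter}>"  # angle brackets keep <X_1> distinct from <X_10>
            token_map[token] = match.group(0)
            return token

        masked = pattern.sub(substitute, masked)
    return masked, token_map


def unmask(response: str, token_map: dict[str, str]) -> str:
    """Put the original values back into text returned by the (hypothetical) LLM call."""
    for token, original in token_map.items():
        response = response.replace(token, original)
    return response


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com or call +1 415-555-0100 about the renewal."
    masked, token_map = mask(prompt)
    print(masked)  # this masked text is what would leave the org
    print(unmask(masked, token_map))  # original values restored locally
```

In this sketch the model only ever sees the placeholders, and the token map never leaves the caller. Anything the patterns don’t recognise, such as a customer’s name, passes through unmasked, which is why masking coverage matters so much.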

What Are the Current Limitations?

Despite all this, the Einstein Trust Layer has some drawbacks. Based on what we’ve seen so far, a few factors affect its effectiveness:

- Data Masking Coverage: While effective, data masking is not applied universally across all text fields, and pattern recognition varies by language and region. It is currently applied only at the prompt and feature layers, not at the Agent layer.

- Toxicity Detection Gaps: Not all languages are supported equally, and certain language patterns may evade detection.

- Grounding Data Quality: Dynamic grounding pulls data from your org, so it’s essential to keep that data current, accurate, and complete.

- Zero Data Retention: Currently limited to OpenAI and Azure OpenAI.

- Reliance on Data Cloud: Features such as audit logging and object grounding require Data Cloud, which comes at an extra cost.

- Staging Environments: LLM Data Masking configuration in Einstein Trust Layer Setup, Grounding on Objects, and the logging and review of audit and feedback data in Data Cloud cannot be tested in staging environments.

- Sandbox Limitations: Most security features cannot be fully tested in non-production environments, which makes pre-deployment validation more challenging. Features that require Data Cloud aren’t available for testing in sandboxes.

- Feature Availability: Some advanced features, such as semantic retrieval, are not yet fully available.

- Masking Accuracy: Pattern-based masking relies on recognising specific patterns or using ML to identify sensitive data. This approach appears solid, but it isn’t always 100% accurate.

- ISV Availability: If you’re an ISV partner, sorry: the Einstein Trust Layer is not packageable.
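The coverage and accuracy points are easy to underestimate. The short Python sketch that follows uses a single hypothetical regex rule (not Salesforce’s actual detection logic) to show how a pattern written for one region’s identifier format silently misses an equivalent identifier from another region.

```python
import re

# Hypothetical, US-centric rule: matches SSN-style numbers such as 123-45-6789.
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def find_sensitive(text: str) -> list[str]:
    """Return every substring that the single US-format rule flags as sensitive."""
    return US_SSN.findall(text)


us_text = "Customer SSN is 123-45-6789."
uk_text = "Customer NI number is QQ 12 34 56 C."  # UK National Insurance number format

print(find_sensitive(us_text))  # ['123-45-6789']
print(find_sensitive(uk_text))  # [] : equally sensitive, but never matched
```

Real detection combines many patterns and ML models, but the failure mode is the same: whatever the rules don’t anticipate reaches the model unmasked, so masking should be validated against your own data and locales.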

The Need for a More Transparent and Secure AI Future

It goes without saying that the industry needs powerful and affordable AI models. However, functionality cannot be the only basis for enterprise adoption: trust, compliance, and security cannot be sacrificed. As LLMs continue to evolve, so do the mechanisms that keep them secure, reliable, and aligned with regulatory requirements. The challenge for AI providers will be balancing innovation with trust, enabling organisations to harness the power of AI without compromising data integrity or compliance.

At Aquiva Labs, we’ve helped Salesforce customers and partners get up and running on Agentforce and shape a broader AI strategy. Let’s connect to discuss how we can reduce your time to market in a secure, scalable way.


Written by:

Greg Wasowski

SVP, Consulting and Strategy