As a passionate ChatGPT enthusiast, I’ve always been intrigued by the potential of harnessing its power to transform existing applications. One day, I had an epiphany: why not take a deep dive into the realm of Salesforce AppExchange and breathe new life into my old app, Formula Debugger?

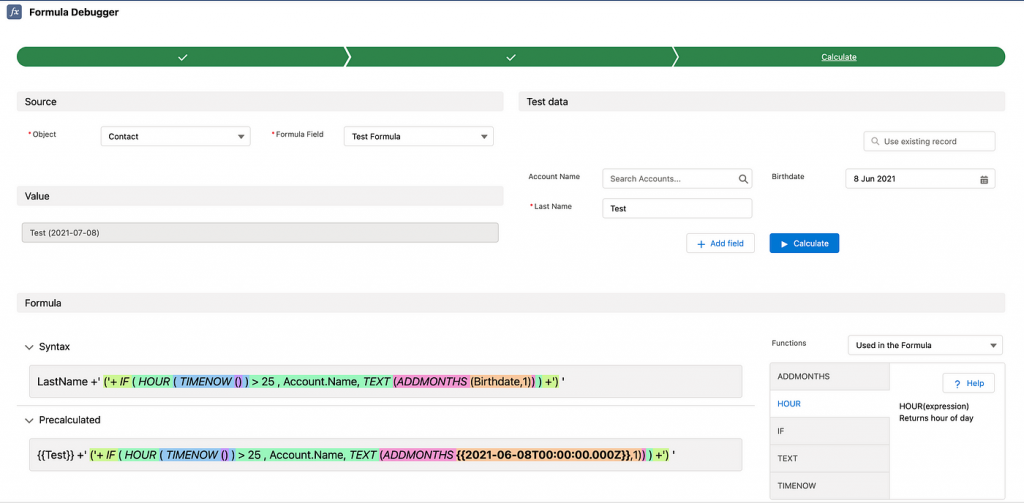

Formula Debugger began its journey as a straightforward tool for testing and debugging formula fields in Salesforce. However, my vision was to infuse it with the cutting-edge capabilities of OpenAI’s ChatGPT, creating a more comprehensive and insightful solution for analyzing and optimizing formula fields.

To add an extra layer of excitement, I challenged myself to generate as much new code as possible using ChatGPT. This creative endeavor would not only test the limits of the AI’s abilities but also demonstrate how it could aid developers in building more advanced and intelligent applications.

Join me as I recount my thrilling adventure of integrating ChatGPT into a Salesforce AppExchange product, and witness how the fusion of human ingenuity and artificial intelligence can elevate an application to new heights.

Setting up OpenAI API integration

In order to integrate OpenAI’s ChatGPT API into your AppExchange application, you’ll need to set up Remote Site Settings and Custom Metadata. These configurations are essential to enable a secure connection between Salesforce and OpenAI’s API, as well as to store and manage the required settings for the integration.

Remote Site Settings

Remote Site Settings are necessary to allow Salesforce to make outbound calls to the OpenAI API. In our development, we’ve created a Remote Site Setting with the endpoint https://api.openai.com. Without this entry, Salesforce blocks callouts to OpenAI’s API.

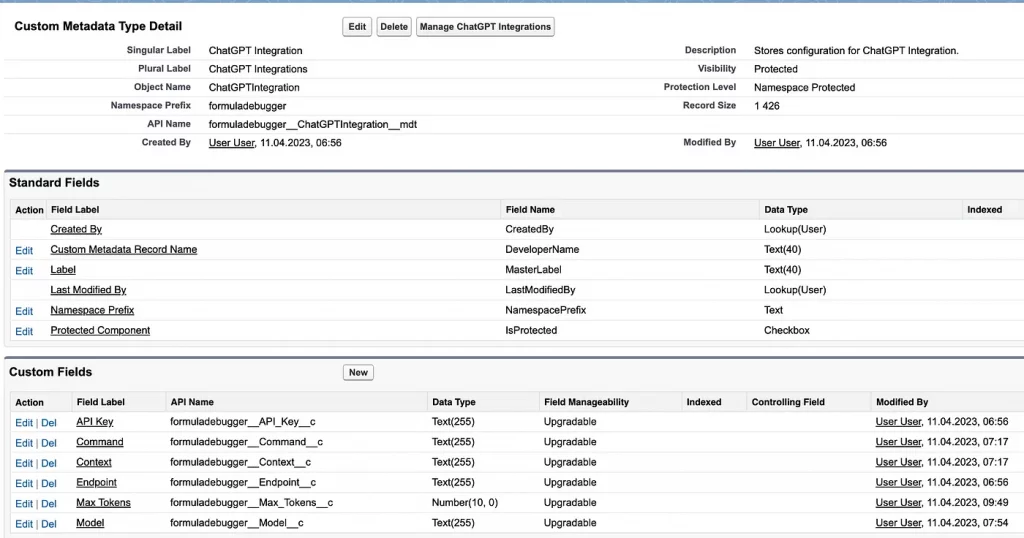

Custom Metadata

We’ve also created a Custom Metadata Type called “ChatGPTIntegration” with several fields:

- Endpoint: Stores the OpenAI API endpoint, which is https://api.openai.com/v1/chat/completions.

- API_Key: Stores the API key required for authenticating with the OpenAI API.

- Model: Stores the name of the OpenAI model to request. The controller reads it as Model__c when building the request body.

- Max_Tokens: Stores an integer value that represents the maximum number of tokens allowed in the API response.

- Context: A string used to provide the context for the OpenAI API call.

- Command: A string used to represent the command or query that you want ChatGPT to understand and respond to.

The Context and Command fields are particularly important: together with the formula, they form the prompt sent to OpenAI, so they largely determine how meaningful the responses are for FormulaDebugger.

Obtaining an API Key from OpenAI

To get an API key from OpenAI, follow these steps:

- Sign up for an OpenAI account or log in to your existing account.

- Visit the API Keys section of your account dashboard.

- Generate a new API key or use an existing one.

Once you have the API key, add it to the API_Key field in the Custom Metadata configuration.

Apex Classes for OpenAI Integration

In order to integrate OpenAI’s ChatGPT API into the FormulaDebugger AppExchange application, we’ve created three Apex classes: FormulaInsightsController, FormulaInsightsMock, and FormulaInsightsControllerTest. Each of these classes plays a crucial role in handling API calls, mocking API responses, and testing the integration.

FormulaInsightsController

FormulaInsightsController is responsible for making API calls to the OpenAI API and processing the response. This class fetches the necessary data from Custom Metadata, such as the endpoint, API key, context, and command, before sending requests to the ChatGPT API. Upon receiving a response, the controller processes it and returns meaningful insights to the FormulaDebugger application.

    public with sharing class FormulaInsightsController {

        @AuraEnabled(cacheable=true)
        public static String getInsights(String formula) {
            // Load the integration settings from Custom Metadata
            ChatGPTIntegration__mdt integration = ChatGPTIntegration__mdt.getInstance('OpenAI');
            if (integration == null) {
                return 'Error: ChatGPTIntegration configuration not found';
            }
            // Respect the FMA feature flag for the subscriber org
            if (FormulaFunctionsCtrl.getConfiguration().showFormulaInsights == false) {
                return 'Error: FMA for Insights not enabled';
            }

            Http http = new Http();
            HttpRequest request = new HttpRequest();
            HttpResponse response;
            request.setEndpoint(integration.Endpoint__c);
            request.setMethod('POST');
            request.setHeader('Content-Type', 'application/json');
            request.setHeader('Authorization', 'Bearer ' + integration.API_Key__c);
            // Set maximum timeout
            request.setTimeout(120000);

            String content = integration.Context__c + ' '
                + String.escapeSingleQuotes(formula) + ' '
                + integration.Command__c;

            // Serialize a map instead of concatenating strings, so that quotes,
            // backslashes, and line breaks in the content become valid JSON
            Map<String, Object> payload = new Map<String, Object>{
                'model' => integration.Model__c,
                'max_tokens' => integration.Max_Tokens__c,
                'messages' => new List<Object>{
                    new Map<String, Object>{ 'role' => 'user', 'content' => content }
                }
            };
            request.setBody(JSON.serialize(payload));

            try {
                response = http.send(request);
                if (response.getStatusCode() == 200) {
                    return response.getBody();
                } else {
                    return 'Error: ' + response.getStatus();
                }
            } catch (Exception e) {
                return 'Error: ' + e.getMessage();
            }
        }
    }
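To inspect the shape of the request outside Salesforce, the same fields can be assembled in plain JavaScript. This is a minimal sketch rather than the app's code: `buildChatRequest` and the `exampleSettings` object are hypothetical names that mirror the Custom Metadata fields, and the key, model name, and prompt strings are placeholders. It only describes the request and does not send it.

```javascript
// Hypothetical helper mirroring the fields of the ChatGPTIntegration record.
// It returns a description of the HTTP request; nothing is sent.
function buildChatRequest(settings, formula) {
  // Same order as the Apex controller: context, formula, command.
  const content = `${settings.context} ${formula} ${settings.command}`;
  return {
    url: settings.endpoint,
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${settings.apiKey}`,
    },
    // JSON.stringify escapes double quotes and backslashes in the formula.
    body: JSON.stringify({
      model: settings.model,
      max_tokens: settings.maxTokens,
      messages: [{ role: 'user', content }],
    }),
  };
}

// Placeholder values, for illustration only.
const exampleSettings = {
  endpoint: 'https://api.openai.com/v1/chat/completions',
  apiKey: 'sk-placeholder',
  model: 'gpt-3.5-turbo',
  maxTokens: 500,
  context: 'Explain this formula:',
  command: 'Describe possible issues.',
};

const exampleRequest = buildChatRequest(
  exampleSettings,
  'IF(Name = "Acme", \'yes\', \'no\')'
);
console.log(exampleRequest.body);
```

Parsing `exampleRequest.body` back with `JSON.parse` returns the formula unchanged, including its double quotes, which is a quick way to check that a formula survives the round trip.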

FormulaInsightsMock

FormulaInsightsMock is an Apex class that implements the HttpCalloutMock interface. This class simulates API responses during test executions, ensuring that we can run tests without making actual API calls to OpenAI. ChatGPT generated the entire content of this class, which showcases its capability to create relevant mock responses for testing purposes.

    @isTest
    global class FormulaInsightsMock implements HttpCalloutMock {
        global HttpResponse respond(HttpRequest req) {
            HttpResponse res = new HttpResponse();
            res.setHeader('Content-Type', 'application/json');
            res.setStatusCode(200);
            res.setBody('{"id": "chatcmpl-abcdefg123456", "object": "chat.completion", "created": 1681152783, "model": "gpt-3.5-turbo-0301", "usage": {"prompt_tokens": 0, "completion_tokens": 0, "total_tokens": 0}, "choices": [{"index": 0, "message": {"role": "assistant", "content": "This is a mock response from the ChatGPT API."}, "finish_reason": "stop"}]}');
            return res;
        }
    }

FormulaInsightsControllerTest

FormulaInsightsControllerTest is an Apex test class designed to test the functionality of the FormulaInsightsController. It uses the FormulaInsightsMock class to simulate a successful API response, then checks that the controller returns a non-empty result that is not an error message. ChatGPT generated this test class in its entirety.

    @isTest
    private class FormulaInsightsControllerTest {
        @isTest
        static void testGetInsights() {
            // Set up the mock for the API call
            Test.setMock(HttpCalloutMock.class, new FormulaInsightsMock());
            Test.startTest();
            // Call the getInsights method with a sample message
            String formula = 'IF ( ( CHANNEL_ORDERS__Renewal_Date__c - TODAY () ) <= 45 && ( ( CHANNEL_ORDERS__Renewal_Date__c - TODAY () ) > 0 ), IMAGE ("/resource/CHANNEL_ORDERS__uilib/images/warning_60_yellow.png", "warning", 16, 16) & \' Ends in \' & TEXT ( CHANNEL_ORDERS__Renewal_Date__c - TODAY ()) & \' Days\', null)';
            String insights = FormulaInsightsController.getInsights(formula);
            Test.stopTest();
            // Assert that the insights are not empty or an error message
            System.assertNotEquals(null, insights, 'Insights should not be null');
            System.assert(insights.length() > 0, 'Insights should not be empty');
            System.assert(!insights.startsWith('Error:'), 'Insights should not start with "Error:"');
        }
    }

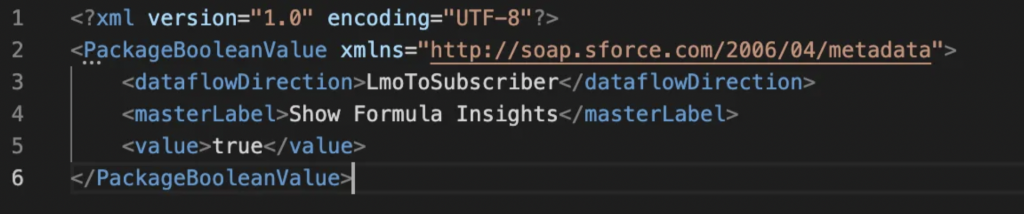

While ChatGPT generated the FormulaInsightsMock and FormulaInsightsControllerTest classes in their entirety, FormulaInsightsController required some minor manual adjustments. The FormulaDebugger application already uses elements like FMA Feature Flags, which allow me to enable or disable the OpenAI integration for specific subscriber orgs as needed. Feature flags are one of the cool technologies available to ISVs and a general best practice for rolling out new features, but they are not mandatory for this integration. It was more efficient to manually align the generated code with the existing codebase than to teach ChatGPT the entire context required to support these features.

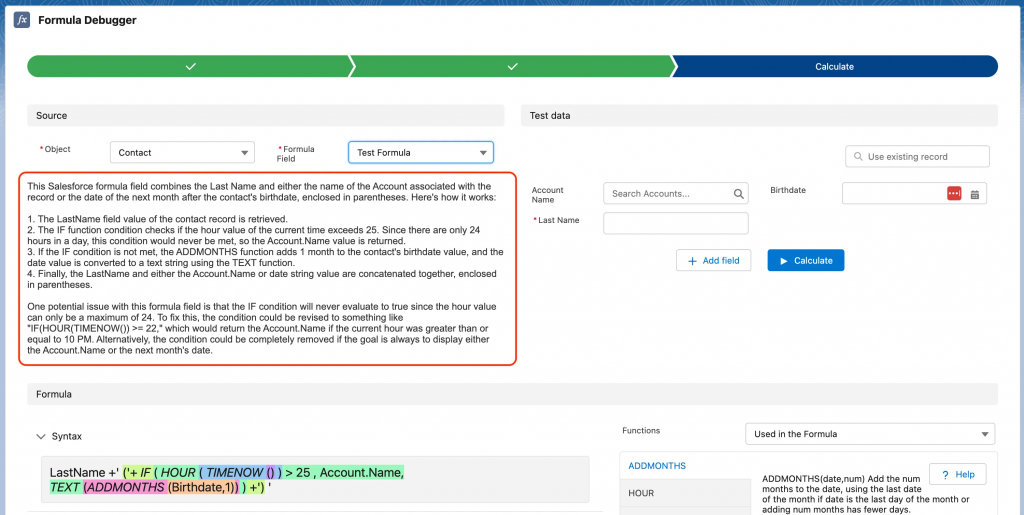

Lightning Web Component Integration with OpenAI

To display the insights provided by the OpenAI ChatGPT API on the frontend, a new Lightning Web Component (LWC) called formulaInsights was created. This LWC integrates with the FormulaInsightsController Apex class and handles the presentation of the insights within the FormulaDebugger application’s main screen.

Implementing getInsights

The formulaInsights LWC utilizes the getInsights method from the FormulaInsightsController Apex class to fetch the insights for a given formula. Once the insights are retrieved, the LWC processes the response and displays it in a user-friendly format on the frontend.

    import { LightningElement, track, api } from 'lwc';
    import getInsightsFromApex from '@salesforce/apex/FormulaInsightsController.getInsights';
    import getConfiguration from '@salesforce/apex/FormulaFunctionsCtrl.getConfiguration';

    export default class formulaInsights extends LightningElement {
        _formulaContent;
        configuration;
        @track insightsText = '';

        // Setting a new formula from the parent triggers a fresh insights request
        @api
        set formulaContent(value) {
            this._formulaContent = value;
            this.getInsights();
        }
        get formulaContent() {
            return this._formulaContent;
        }

        async connectedCallback() {
            try {
                this.configuration = await getConfiguration();
                // A formula may have been set before the configuration loaded,
                // so retry now that the feature flag is known
                await this.getInsights();
            } catch (exception) {
                console.error('FormulaFunctions Connected Callback exception', exception);
            }
        }

        async getInsights() {
            if (this.formulaContent && this.formulaContent.trim().length > 0 && this.configuration?.showFormulaInsights) {
                try {
                    const response = await getInsightsFromApex({ formula: this.formulaContent });
                    // The controller returns the raw OpenAI response body as a JSON string
                    const jsonResponse = JSON.parse(response);
                    const content = jsonResponse.choices[0].message.content;
                    this.insightsText = content;
                } catch (error) {
                    this.insightsText = '';
                    console.error('Error with insights loading:', error.message);
                }
            } else {
                this.insightsText = '';
            }
        }
    }

To achieve this, the LWC calls the getInsights Apex method imperatively with async/await rather than through the wire service. The formulaContent setter triggers the call whenever the parent component passes in a new formula, and the formula content is sent as the only parameter. When the response arrives, the LWC updates insightsText, and the template renders the latest text:

    <template>
        <div class="slds-m-top_medium">
            <p class="response-text">{insightsText}</p>
        </div>
    </template>
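Because the Apex controller returns either the raw OpenAI response body or a plain string starting with "Error:", the parsing step can be isolated into a small function. The following is a minimal sketch, assuming a hypothetical helper named `extractInsight` that is not part of the app; it returns a result object instead of throwing when the input is not JSON.

```javascript
// Hypothetical helper: turns the string returned by getInsights into a
// result object instead of throwing on non-JSON input.
function extractInsight(response) {
  if (typeof response !== 'string' || response.trim().length === 0) {
    return { ok: false, text: 'Empty response' };
  }
  // The Apex controller reports failures as plain text starting with "Error:".
  if (response.startsWith('Error:')) {
    return { ok: false, text: response };
  }
  try {
    const parsed = JSON.parse(response);
    // Optional chaining guards against a missing choices array or message.
    const content = parsed?.choices?.[0]?.message?.content;
    return typeof content === 'string'
      ? { ok: true, text: content.trim() }
      : { ok: false, text: 'Response did not contain a message' };
  } catch (e) {
    return { ok: false, text: 'Response was not valid JSON' };
  }
}

// Trimmed-down version of the mock response used in the Apex test.
const sampleResponse =
  '{"choices": [{"index": 0, "message": {"role": "assistant", ' +
  '"content": "This is a mock response from the ChatGPT API."}, ' +
  '"finish_reason": "stop"}]}';

console.log(extractInsight(sampleResponse));
console.log(extractInsight('Error: Unauthorized'));
```

A component could use the `ok` flag to decide whether to show the text as an insight or to surface it as an error, rather than clearing the panel silently.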

Displaying Insights on the Formula Debugger Main Screen

The formulaInsights LWC is designed to integrate with the existing FormulaDebugger application’s main screen. When a user selects a formula, the LWC fetches insights from the OpenAI API and presents them as text alongside the formula. This allows users to better understand the formula they are working with and receive insights from OpenAI directly within the application.

Getting Meaningful Responses from OpenAI

To extract the most valuable AI insights for our application using the OpenAI API, it’s essential to understand the roles of both Context and Command in our Custom Metadata.

Leveraging Context and Command

When sending a single API call, we create content by combining three elements: context, the user-selected formula, and the command specifying our request for OpenAI. The code responsible for preparing the content can be found in the FormulaInsightsController:

    String content = integration.Context__c + ' '
        + String.escapeSingleQuotes(formula) + ' '
        + integration.Command__c;

The context is created similarly to the method described in this Medium article.

For example, the full content message might look like this:

You are Salesforce Expert and Certified Advanced Administrator. Explain what this salesforce formula field is doing, step by step, and how it works:

IF (ISBLANK (TEXT (Salutation)), '', TEXT (Salutation) & ' ') & FirstName & ' ' & LastName

describe what can be an issue with this formula field and how to fix it
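The way the three parts combine can be sketched in a few lines of JavaScript. This is an illustration only: `buildPrompt` is a hypothetical helper, not a function from the app, and unlike the Apex controller it does not apply String.escapeSingleQuotes to the formula. The strings are taken from the example above.

```javascript
// Hypothetical helper mirroring the Apex concatenation:
// context + ' ' + formula + ' ' + command.
function buildPrompt(context, formula, command) {
  if (!formula || formula.trim().length === 0) {
    throw new Error('A formula is required to build the prompt');
  }
  return `${context} ${formula.trim()} ${command}`;
}

const exampleContext =
  'You are Salesforce Expert and Certified Advanced Administrator. ' +
  'Explain what this salesforce formula field is doing, step by step, and how it works:';
const exampleFormula =
  "IF (ISBLANK (TEXT (Salutation)), '', TEXT (Salutation) & ' ') & FirstName & ' ' & LastName";
const exampleCommand =
  'describe what can be an issue with this formula field and how to fix it';

const fullPrompt = buildPrompt(exampleContext, exampleFormula, exampleCommand);
console.log(fullPrompt);
```

Only the middle argument changes between calls, so the context and command can be revised without touching the code that supplies the formula.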

By keeping the context and command separately in custom metadata, ISVs have the opportunity to improve them over time. This allows for the delivery of enhancements via push upgrades when better prompts are discovered.

Fine-tuning Context and Command

To obtain the best AI insights for your application, it’s crucial to carefully craft the context and command. The context should provide enough background information to enable the AI to understand the scenario, while the command should be clear and concise, specifying the exact task you want the AI to perform.

By refining your context and command over time, you’ll be able to continually improve the quality of the AI-generated insights, thus enhancing the user experience within your application.

In conclusion, understanding the roles of context and command in Custom Metadata is essential for getting the most meaningful responses from OpenAI. Continually refining these elements will ensure that your application remains innovative and provides valuable insights to users.

Summary: A Seamless OpenAI Integration with AppExchange App

Integrating OpenAI’s ChatGPT with my AppExchange application, FormulaDebugger, proved to be a smooth and efficient process. The majority of the required code was generated by ChatGPT itself, enabling me to release an updated version of the FormulaDebugger app within just a few hours. The latest version is now available for free on AppExchange for users to enjoy.

It’s important to note that, depending on the information stored within your application, using OpenAI’s public endpoint may not be suitable for security reasons. There are alternative options available, such as hosting your private ChatGPT instance. Deciding on the best approach requires a thorough analysis of your specific requirements.

Get ChatGPT-powered hints on your ISV App Errors: Video Demo

Want to Connect? If you need assistance in leveraging the latest technologies for AppExchange applications or exploring how artificial intelligence can benefit your app, consider partnering with an Expert Product Development Outsourcer (PDO) like Aquiva Labs, where I’m lucky to work. We specialize in consulting services for ISV partners, helping them adapt to the AppExchange ecosystem and make their applications more innovative. If you’re interested in discussing the potential of AI for your application, please feel free to reach out to me at any time.

Case Study by Jakub Stefaniak

Vice President of Technology Strategy and Innovation at Aquiva Labs

Video Demo by Robert Sösemann

Principal ISV Architect at Aquiva Labs